K3S Setup

Description

Virtualized Kubernetes cluster based on K3S on Debian 11.

Features:

- IPv4/IPv6 dual-stack network (Calico)

- Load balancer (MetalLB)

- RWO/RWX Storage with Longhorn

Requirements

One Debian VM as the master node and at least three worker nodes (Longhorn keeps three replicas per volume by default). Master node specifications:

- 2 Cores

- 4 GB RAM

- 32 GB Root Disk

App (worker) node specifications:

- 4 Cores

- 8 GB RAM

- 32 GB Root Disk

- Second disk for Longhorn (in my case a dedicated 1 TB SSD per node)

Network

Node Network:

- Network for node IPs
- Recommended size
  - IPv4 /24
  - IPv6 /64
- Example
  - IPv4: 10.0.3.0/24
  - IPv6: 2001:780:250:5::/64

Service Network:

- Network for Service IP's

- IPv6 max Size /108

- Recommended size

- IPv4 /16

- IPv6 /108

- Example

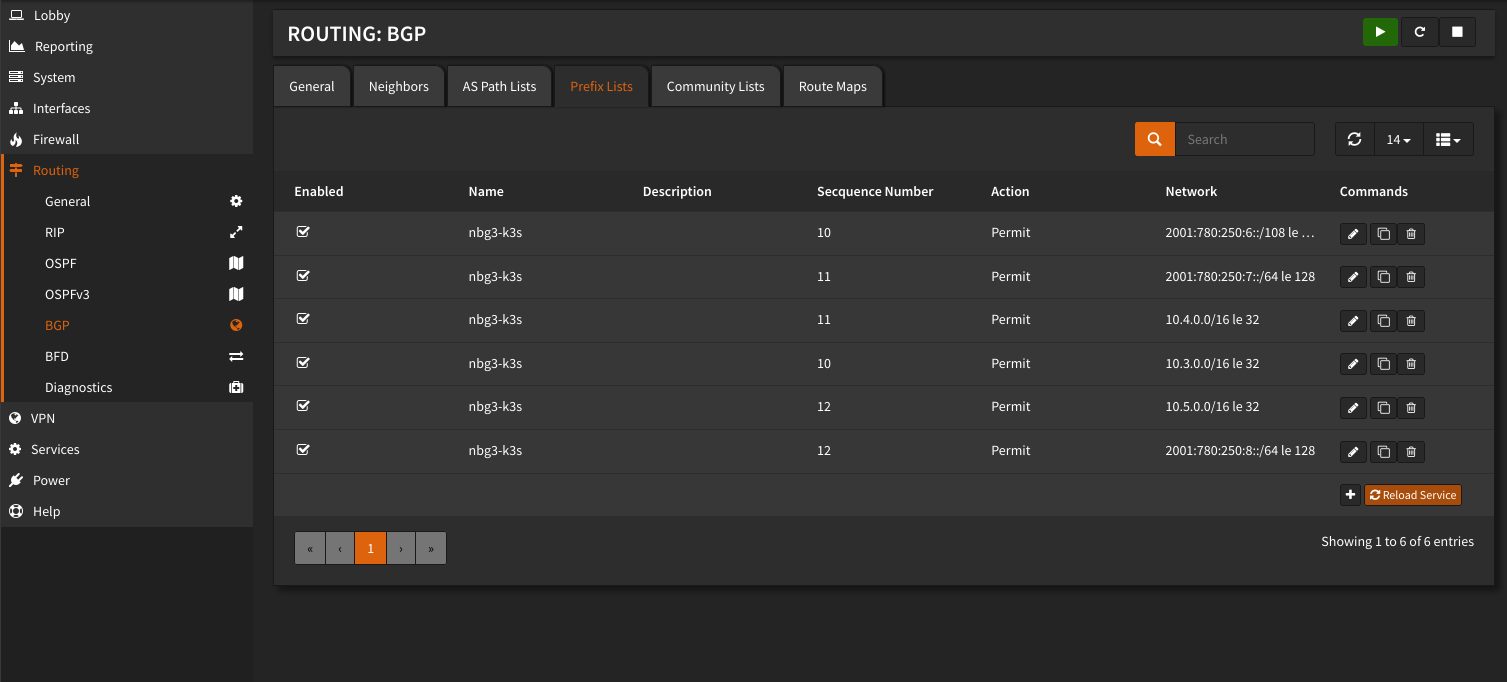

- IPv4: 10.3.0.0/16

- IPv6: 2001:780:250:6::/108
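The /108 limit can be checked before it ends up in the K3S config. This is a minimal Bash sketch; the function name `v6_service_cidr_ok` is hypothetical, and it only inspects the prefix length, not whether the address part is a valid IPv6 address.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if an IPv6 service CIDR is not larger than /108,
# i.e. its prefix length is between 108 and 128.
# A /108 leaves 128 - 108 = 20 host bits, i.e. 2^20 = 1048576 service IPs.
v6_service_cidr_ok() {
  local cidr="$1"
  local len="${cidr##*/}"                                   # text after the last '/'
  [[ "$cidr" == */* && "$len" =~ ^[0-9]+$ ]] || return 2     # malformed input
  (( 10#$len >= 108 && 10#$len <= 128 ))
}

# Usage:
# v6_service_cidr_ok 2001:780:250:6::/108 && echo "ok"
```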

Cluster Network:

- Network for pod IPs
- Recommended size
  - IPv4 /16
  - IPv6 /64
- Example
  - IPv4: 10.4.0.0/16
  - IPv6: 2001:780:250:7::/64

Load Balancer Network:

- Network for load balancer IPs (MetalLB)
- Example
  - IPv4: 10.5.0.0/16
  - IPv6: 2001:780:250:8::/64

A router or firewall with BGP support (in my case OPNsense).

Setup

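Install Required Software

The K3S binary is required for the cluster and calicoctl for managing the Calico network. calicoctl is saved as kubectl-calico so that kubectl picks it up as a plugin and it can be called as `kubectl calico`. The K3S install script is downloaded separately; since the binary is already in place, the script is later run with INSTALL_K3S_SKIP_DOWNLOAD=true.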

```shell
curl -L https://github.com/k3s-io/k3s/releases/latest/download/k3s -o /usr/local/bin/k3s
curl -L https://github.com/projectcalico/calico/releases/latest/download/calicoctl-linux-amd64 -o /usr/local/bin/kubectl-calico
chmod +x /usr/local/bin/{k3s,kubectl-calico}

# install script, run later as ./install.sh
curl -L https://get.k3s.io/ -o install.sh
chmod +x install.sh
```
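Before continuing, it can help to confirm that both downloads ended up on the PATH. A small Bash sketch; the function name `require_bins` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report every named command that cannot be found on PATH.
# Returns 0 only if all of them were found.
require_bins() {
  local missing=0 bin
  for bin in "$@"; do
    if ! command -v "$bin" >/dev/null 2>&1; then
      echo "not found on PATH: $bin" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage on a node:
# require_bins k3s kubectl-calico
# Once K3S is installed (install.sh creates the kubectl symlink):
# kubectl calico version
```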

Additional Settings

Raise the filesystem notifier (inotify) limit

Add the line to /etc/sysctl.conf and apply it with `sysctl -p`.

Set up a Private Registry (optional)

To use Harbor as a proxy cache, create the config file /etc/rancher/k3s/registries.yaml with the following content:

```yaml
mirrors:
  docker.io:
    endpoint:
      - "https://harbor.example.net/v2/dockerhub"
  harbor.example.net:
    endpoint:
      - "https://harbor.example.net"
```

Replace harbor.example.net with the hostname of your Harbor instance.

Replace dockerhub with the name of your proxy cache project.
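Both replacements can be done in one step when the file is generated. A minimal Bash sketch; the function `write_registries` and its argument order are hypothetical, and the target directory defaults to /etc/rancher/k3s but can be overridden. K3S reads registries.yaml at startup, so restart k3s (server) or k3s-agent after changing it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write registries.yaml for a Harbor proxy cache.
#   $1 = Harbor hostname, $2 = proxy cache project, $3 = target dir (optional)
write_registries() {
  local harbor_host="$1" proxy_project="$2" conf_dir="${3:-/etc/rancher/k3s}"
  mkdir -p "$conf_dir"
  cat > "$conf_dir/registries.yaml" <<EOF
mirrors:
  docker.io:
    endpoint:
      - "https://${harbor_host}/v2/${proxy_project}"
  ${harbor_host}:
    endpoint:
      - "https://${harbor_host}"
EOF
}

# Usage (as root on every node):
# write_registries harbor.example.net dockerhub && service k3s restart
```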

Set up the K3S Server (Master Node)

Create the K3S config file /etc/rancher/k3s/config.yaml:

```yaml
# IPv4 and IPv6 address of this node
node-ip: 10.0.3.11,2001:780:250:5::11
service-cidr: 10.3.0.0/16,2001:780:250:6::/108 # service network
cluster-cidr: 10.4.0.0/16,2001:780:250:7::/64 # pod network
# network policies are handled by Calico instead of the embedded controller
disable-network-policy: true
# global DNS domain of the cluster
cluster-domain: k3s.example.net # default `cluster.local`
# Calico replaces flannel as the CNI
flannel-backend: none
# uncomment to disable the Traefik ingress controller
#disable: traefik
```

Create the Cluster

```shell
INSTALL_K3S_SKIP_DOWNLOAD=true ./install.sh
```

Set up the K3S Agents (App Nodes)

Create the K3S config file /etc/rancher/k3s/config.yaml.

Get the join token from the server node; it is stored in /var/lib/rancher/k3s/server/node-token.

Join the Cluster

```shell
INSTALL_K3S_SKIP_DOWNLOAD=true K3S_URL="https://[2001:780:250:5::11]:6443" K3S_TOKEN=<Token from Server Node> ./install.sh
```
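Note the square brackets in K3S_URL: an IPv6 literal has to be bracketed inside a URL, otherwise its colons collide with the port separator. A small Bash sketch that builds the URL for either address family; the function name `k3s_server_url` is hypothetical, and 6443 is the API port used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the K3S server URL for an IPv4 or IPv6 address.
# IPv6 literals (detected by the presence of ':') are wrapped in [ ].
k3s_server_url() {
  local ip="$1" port="${2:-6443}"
  if [[ "$ip" == *:* ]]; then
    printf 'https://[%s]:%s\n' "$ip" "$port"
  else
    printf 'https://%s:%s\n' "$ip" "$port"
  fi
}

# Usage on an agent (the token value itself comes from the server node):
# INSTALL_K3S_SKIP_DOWNLOAD=true K3S_URL="$(k3s_server_url 2001:780:250:5::11)" \
#   K3S_TOKEN="<Token from Server Node>" ./install.sh
```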

Set up the Network

Configure BGP on OPNSense

Install the os-frr plugin under System > Firmware > Plugins.

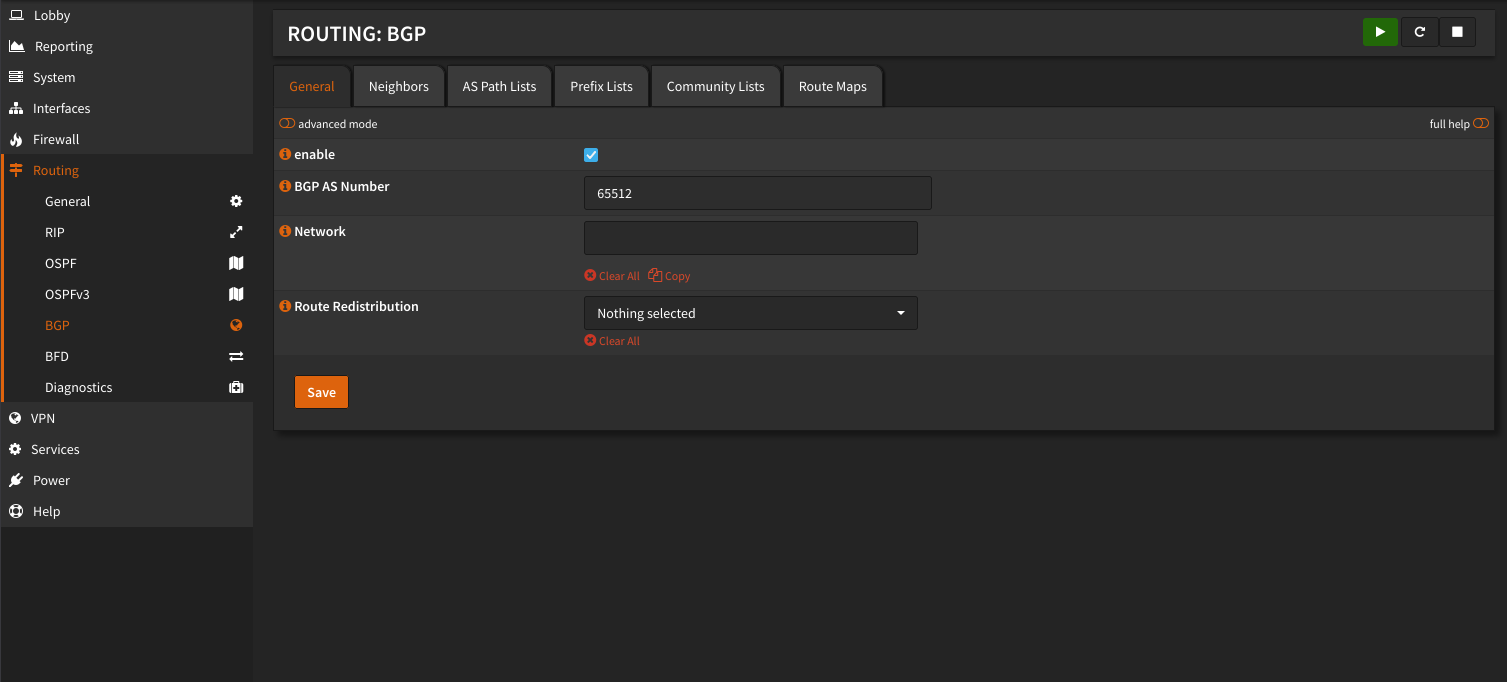

Under Routing > General, tick the Enable checkbox.

Under Routing > BGP, set the BGP AS number to a private AS number (64512 to 65534); in my case 65512.
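To double-check an AS number against the 16-bit private range (RFC 6996), here is a small Bash sketch; the function name `is_private_asn` is hypothetical. Both AS numbers used in this guide, 65512 for OPNsense and 65534 for Calico, fall inside the range.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the argument is a 16-bit private AS number
# (64512-65534). The 32-bit private range is not covered here.
is_private_asn() {
  local asn="$1"
  [[ "$asn" =~ ^[0-9]+$ ]] || return 2      # not a number
  (( 10#$asn >= 64512 && 10#$asn <= 65534 ))
}

# Usage:
# is_private_asn 65512 && echo "private"
```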

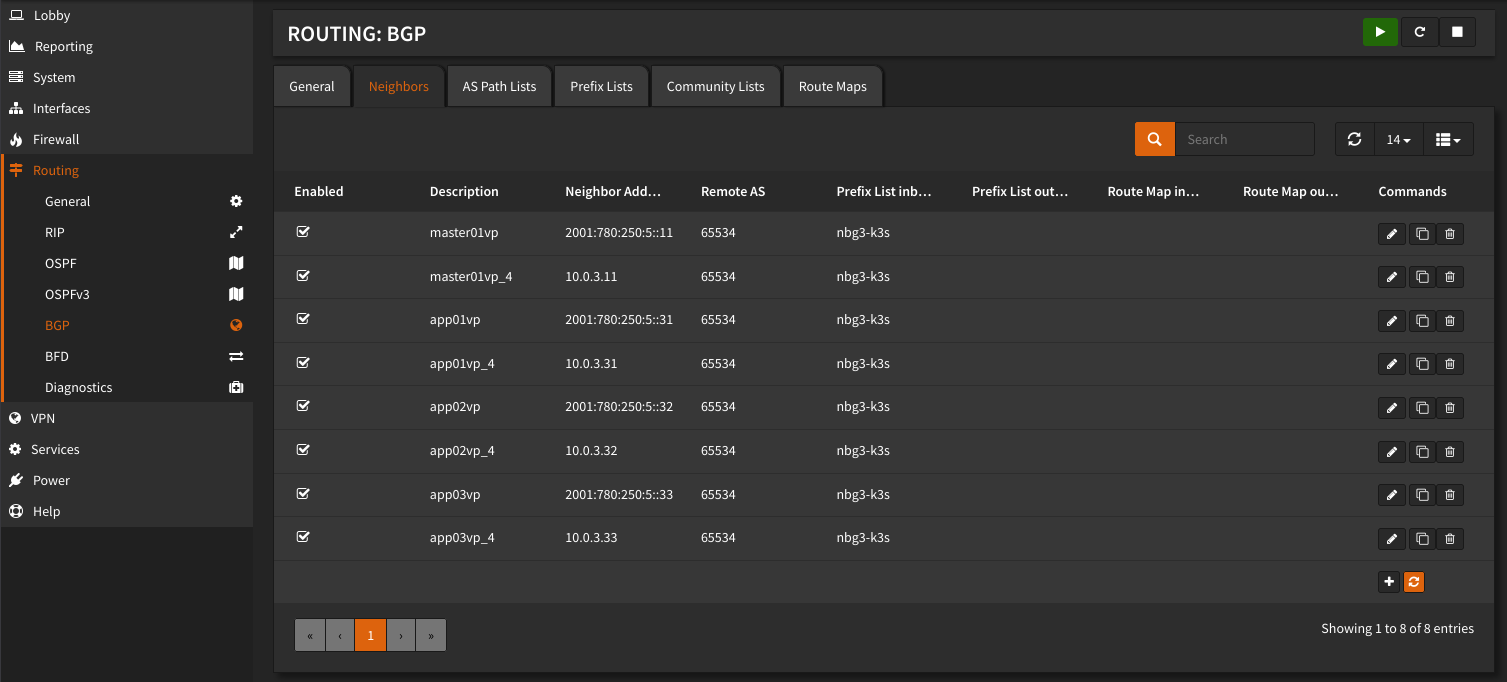

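Create a neighbor entry for every K3S node address (IPv4 and IPv6, since Calico peers over both), with the Calico AS number (65534 in the BGP configuration below) as remote AS.

To prevent the K3S cluster from announcing networks other than the defined ones, create a prefix list containing all K3S networks. Then assign this prefix list as the inbound filter of every neighbor entry.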

Install Calico

Install Calico in the cluster:

```shell
kubectl apply -f https://projectcalico.docs.tigera.io/manifests/calico.yaml
```

Create the Calico BGP configuration as calico-bgp.yaml and apply it with `kubectl calico apply -f calico-bgp.yaml`:

```yaml
---
apiVersion: projectcalico.org/v3
kind: BGPConfiguration
metadata:
  name: default
spec:
  logSeverityScreen: Info
  nodeToNodeMeshEnabled: true
  asNumber: 65534
  serviceClusterIPs:
    - cidr: 10.3.0.0/16
    - cidr: 2001:780:250:6::/108
  serviceExternalIPs:
    - cidr: 10.3.0.0/16
    - cidr: 2001:780:250:6::/108
  # MetalLB
  serviceLoadBalancerIPs:
    - cidr: 10.5.0.0/16
    - cidr: 2001:780:250:8::/64
---
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: fw01
spec:
  peerIP: 2001:780:250:5::1
  #peerIP: 10.0.3.1
  asNumber: 65512
  keepOriginalNextHop: true
---
apiVersion: projectcalico.org/v3
kind: BGPPeer
metadata:
  name: fw01-4
spec:
  peerIP: 10.0.3.1
  asNumber: 65512
  keepOriginalNextHop: true
```

Create the IP pools as calico-pools.yaml and apply them with `kubectl calico apply -f calico-pools.yaml` (calicoctl works with the projectcalico.org/v3 API):

```yaml
---
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: default-ipv4-ippool
spec:
  cidr: 10.4.0.0/16
---
apiVersion: projectcalico.org/v3
kind: IPPool
metadata:
  name: default-ipv6-ippool
spec:
  cidr: 2001:780:250:7::/64
```

Install MetalLB

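Install MetalLB in the cluster with Helm and Helmfile: save the following as helmfile.yaml and run `helmfile apply`.

```yaml
repositories:
  - name: metallb
    url: https://metallb.github.io/metallb

releases:
  - name: metallb
    namespace: metallb-system
    createNamespace: true
    chart: metallb/metallb
    version: 0.13.7
    values:
      - speaker:
          enabled: false
```

The MetalLB speaker is disabled because Calico already announces the load balancer IPs via BGP (serviceLoadBalancerIPs in the Calico BGP configuration); MetalLB only assigns the addresses.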

Create the MetalLB IPAddressPool:

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: default
  namespace: metallb-system
spec:
  addresses:
    - 10.5.0.0/16
    - 2001:780:250:8::/64
  avoidBuggyIPs: true
```
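With avoidBuggyIPs set, MetalLB does not hand out IPv4 addresses ending in .0 or .255, since some network equipment mishandles them. MetalLB applies this rule itself; the Bash sketch below (hypothetical function `is_buggy_ipv4`) only illustrates which addresses of 10.5.0.0/16 are skipped.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if an IPv4 address would be skipped by
# avoidBuggyIPs, i.e. its last octet is 0 or 255.
is_buggy_ipv4() {
  local last="${1##*.}"      # last octet
  [[ "$last" == "0" || "$last" == "255" ]]
}

# Usage:
# is_buggy_ipv4 10.5.1.0 && echo "skipped by MetalLB"
```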

Update

K3s

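Update the master node first. Once it is done, update the worker nodes one after the other. Replace v1.24.8%2Bk3s1 with the target version; on the master node, restart the k3s service instead of k3s-agent.

```shell
curl https://github.com/k3s-io/k3s/releases/download/v1.24.8%2Bk3s1/k3s -sLo k3s
cp k3s /usr/local/bin/
service k3s-agent restart   # on the master node: service k3s restart
```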
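The "+" in a K3S version tag must be URL-encoded as %2B in the download URL, and the service to restart depends on the node role (install.sh creates k3s on the server and k3s-agent on agents). A Bash sketch of both; the function names `k3s_download_url` and `k3s_service_for_role` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: release download URL for a version tag such as v1.24.8+k3s1.
# Every '+' is replaced with its URL encoding %2B.
k3s_download_url() {
  local version="$1"
  printf 'https://github.com/k3s-io/k3s/releases/download/%s/k3s\n' "${version//+/%2B}"
}

# Hypothetical helper: systemd service name created by install.sh for a role.
k3s_service_for_role() {
  case "$1" in
    server) echo k3s ;;
    agent)  echo k3s-agent ;;
    *)      return 1 ;;
  esac
}

# Usage on a worker node (as root):
# curl -sLo k3s "$(k3s_download_url v1.24.8+k3s1)"
# cp k3s /usr/local/bin/ && service "$(k3s_service_for_role agent)" restart
```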

Calico

Simply re-apply the Calico manifest with `kubectl apply -f https://projectcalico.docs.tigera.io/manifests/calico.yaml`.

MetalLB

Set the version in helmfile.yaml to the newest Helm chart version and run `helmfile apply` again.